Centralized Data Management for Plant Research: Accelerating Drug Discovery in 2025

Abstract

This article provides a comprehensive guide for researchers, scientists, and drug development professionals on implementing centralized data management in plant research facilities. It explores the foundational drivers—from overcoming data silos and ensuring regulatory compliance to enabling AI-driven discovery. The content delivers actionable methodologies for building robust data architectures, practical solutions for common data quality and integration challenges, and a framework for validating ROI through accelerated research cycles and improved collaboration. As the pharmaceutical industry experiences an unprecedented wave of new plant construction, this guide is essential for building a future-proof data foundation that turns research data into a strategic asset.

Why Centralize? The Imperative for Unified Data in Modern Plant Research

Technical Support Center

Troubleshooting Guides

Guide 1: Resolving Clinical Data Integration Failures

Problem: Clinical trial data from different sources (e.g., CTMS, EDC) fails to integrate into a unified data lake, causing delays in analysis.

Explanation: Integration failures often occur due to non-standardized data formats and inconsistent use of data standards across different systems and teams. This prevents the creation of a single source of truth [1].

Solution:

- Step 1: Audit all incoming data for compliance with CDISC standards, including SDTM and ADaM models [1].

- Step 2: Implement an AI-powered data harmonization tool to automatically cleanse, standardize, and enrich fragmented datasets [1].

- Step 3: Establish a governance-driven metadata management framework to ensure ongoing data integrity, traceability, and security [1].
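The audit in Step 1 can begin with a presence check on identifier variables. The sketch below is a minimal illustration under stated assumptions: the three variable names are a small subset of SDTM identifier variables chosen for the example, and `audit_records` and the sample records are hypothetical. A real audit would check against the full variable list in the applicable SDTM implementation guide.

```python
# Illustrative subset only; the SDTM implementation guide defines the full list.
REQUIRED_IDENTIFIERS = ("STUDYID", "DOMAIN", "USUBJID")


def audit_records(records, required=REQUIRED_IDENTIFIERS):
    """Return (row_index, problem) tuples for records missing a required field."""
    findings = []
    for index, record in enumerate(records):
        for name in required:
            value = record.get(name)
            # Treat absent keys and blank strings the same way.
            if value is None or str(value).strip() == "":
                findings.append((index, f"missing {name}"))
    return findings


# Hypothetical incoming records from two source systems.
incoming = [
    {"STUDYID": "ST-001", "DOMAIN": "DM", "USUBJID": "ST-001-0001"},
    {"STUDYID": "ST-001", "DOMAIN": "", "USUBJID": "ST-001-0002"},
    {"STUDYID": "ST-001", "USUBJID": "ST-001-0003"},
]

findings = audit_records(incoming)
for row, problem in findings:
    print(f"row {row}: {problem}")
```

Running the check before ingestion keeps non-conforming records out of the data lake rather than repairing them afterwards.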

Prevention:

- Adopt a unified data repository with role-based access from the start of the trial [1].

- Provide training on data standards for all research staff.

Guide 2: Addressing Poor Cross-Market Data Comparability

Problem: Inability to reliably compare performance and insights across different regional markets.

Explanation: A decentralized approach, where local markets make independent technology decisions, leads to inconsistent KPIs, divergent measurement practices, and ultimately, data that cannot be compared [2].

Solution:

- Step 1: Establish a global coordination team to define and roll out standardized KPIs and measurement practices [2].

- Step 2: Implement a centralized platform that provides a shared data foundation for all markets [2].

- Step 3: Create a "learn and reuse" process where successful innovations from "lighthouse" pilot markets are quickly scaled across other regions [2].

Prevention: Shift from a siloed, decentralized model to a globally coordinated, centralized approach for data management and analytics [2].

Frequently Asked Questions (FAQs)

Q1: What are the most common root causes of data silos in pharmaceutical R&D? Data silos persist due to several structural factors: technical integration challenges with fragmented sources and proprietary tools, a complex global value chain with poor communication between units, prolonged development cycles that disrupt continuity, and resource limitations that delay investments in modernized data infrastructure [1].

Q2: We have a global operation. How can we centralize data management without stifling local innovation? Centralization does not require a one-size-fits-all model. The key is to establish a strong global backbone comprising guidelines, governance, and coordination mechanisms. This backbone provides the necessary structure and safety, while empowering local teams to customize experiences for their regional customers. This approach balances global efficiency with local relevance [2].

Q3: What is a "linkable data infrastructure" and how does it help? A linkable data infrastructure uses privacy-protecting tokenization to connect de-identified patient records across disparate datasets. By employing a common token, it eliminates silos and enhances longitudinal insights without compromising patient privacy or HIPAA compliance. This allows for the integration of real-world data into clinical development and strengthens health economics and outcomes research [3].
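To make the idea of a common token concrete, the sketch below derives a deterministic token from normalized identifiers with a keyed hash (HMAC-SHA256). This is an assumption made for illustration, not the scheme used by any particular vendor or described in [3]. The function name, the chosen identifier fields, and the demo key are hypothetical, and a keyed hash alone does not establish HIPAA compliance.

```python
import hashlib
import hmac


def make_token(first_name, last_name, birth_date, secret_key):
    """Derive a deterministic token from normalized identifiers using a keyed hash."""
    # Normalize so that formatting differences between sources do not change the token.
    normalized = "|".join(
        part.strip().lower() for part in (first_name, last_name, birth_date)
    )
    return hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key; a real deployment would manage keys in a secured service.
key = b"demo-key-not-for-production"

trial_token = make_token("Ana", "Silva", "1980-02-14", key)
claims_token = make_token(" ana ", "SILVA", "1980-02-14", key)
other_token = make_token("Ana", "Silva", "1980-02-15", key)

print(trial_token == claims_token)  # same person, different formatting
print(trial_token == other_token)   # different birth date
```

Records from two datasets that carry the same token can then be joined without either dataset exposing the underlying identifiers.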

Q4: What quantitative benefits can we expect from breaking down data silos? Breaking down data silos can lead to substantial financial and operational improvements. Deloitte's 2025 insights indicate that AI investments, when supported by enterprise-wide digital integration, could boost revenue by up to 11% and yield up to 12% in cost savings for pharmaceutical and life sciences organizations [1].

Table 1: Financial and Operational Impact of Data Silos and Integration

| Metric | Situation with Data Silos | Situation After Data Integration | Data Source |
|---|---|---|---|
| Drug Development Cost | Averages over $2.2 billion per successful asset [1] | Potential for significant cost reduction | [1] |
| Potential Revenue Boost | N/A | Up to 11% from AI investments supported by integration [1] | [1] |
| Potential Cost Savings | N/A | Up to 12% from AI investments supported by integration [1] | [1] |
| Regulatory Document Processing | 45 minutes per document [1] | 2 minutes per document (over 90% accuracy) [1] | [1] |

Table 2: Centralized vs. Decentralized Data Management Approach

| Aspect | Centralized Approach | Decentralized Approach |
|---|---|---|
| Speed to Insight | Faster roll-out of new analytics capabilities across markets [2] | Slowed by endless local pilots that are difficult to scale [2] |
| Market Comparability | Enabled via shared data foundation and standardized KPIs [2] | Extremely difficult due to inconsistencies [2] |
| Cost Efficiency | Substantial savings from reduced redundant investments [2] | Higher overall costs [2] |
| Innovation Scaling | Successful innovations can be quickly scaled across all markets [2] | Innovations get stuck in one region, leading to competitive disadvantage [2] |

Experimental Protocols

Protocol: Implementing a Centralized Data Lake for Clinical Trial Data

Objective: To create a single, secure source of truth for all clinical trial data, enabling seamless collaboration and faster insights for research teams.

Materials:

- Data sources (CTMS, EDC, real-world data sources)

- Cloud-native platform (e.g., AWS, Azure, GCP)

- Data standardization tools (e.g., supporting CDISC standards)

Methodology:

- Assessment: Conduct a maturity assessment to identify gaps in the current data architecture [2].

- Platform Selection: Adopt a scalable, cloud-native platform to serve as the unified data repository [1].

- Data Ingestion & Standardization: Ingest data from all relevant sources. Apply CDISC standards (SDTM, ADaM) to ensure consistent structuring [1].

- Governance & Access Control: Establish a strong data governance framework with role-based access controls to ensure data security, integrity, and compliance (e.g., with GxP, GDPR) [1] [4].

- Validation: Monitor data pipelines for integration consistency and validate data quality using predefined metrics.

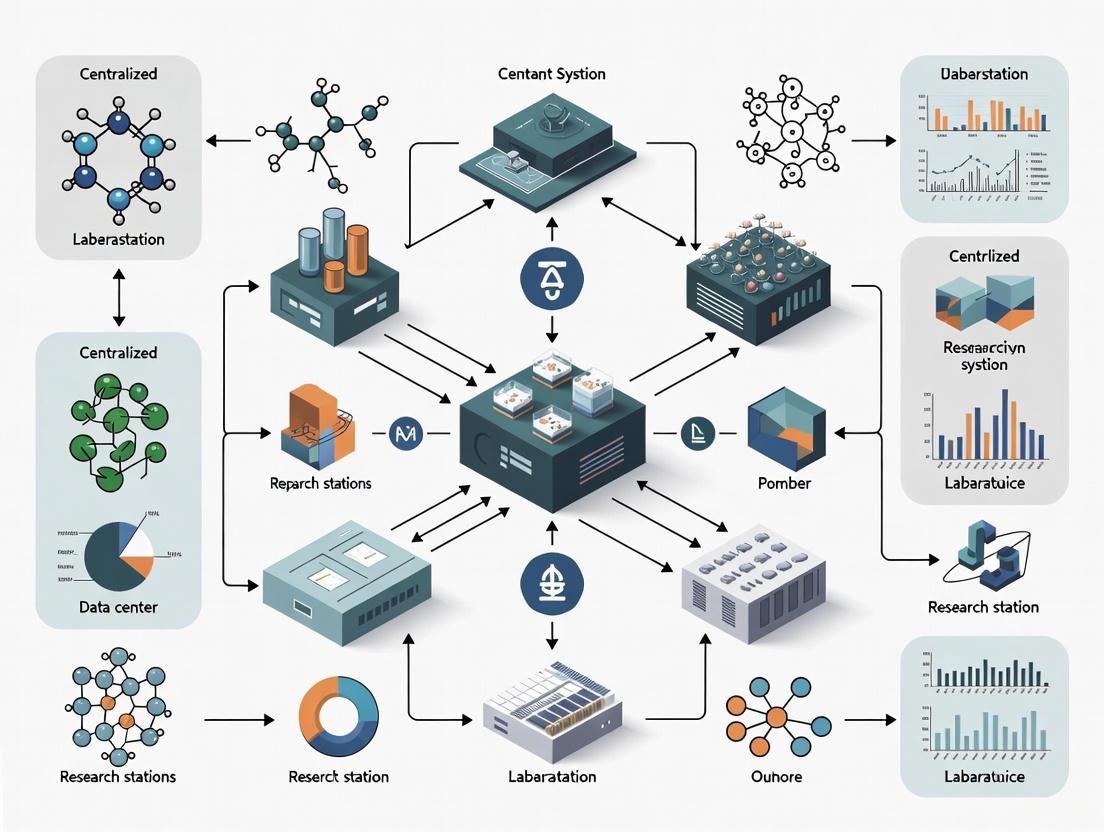

Workflow Diagrams

Diagram 1: Data Integration Pathway

Diagram 2: Centralized vs. Decentralized Data Architecture

The Scientist's Toolkit: Essential Data Management Solutions

| Tool / Standard | Function | Applicable Context |
|---|---|---|
| CDISC Standards (SDTM, ADaM) | Defines consistent structures for clinical data to ensure interoperability and regulatory compliance [1]. | Clinical trial data submission and analysis. |
| Cloud-Native Platform | Provides a scalable, secure environment (data lake) to integrate legacy and real-time datasets [1]. | Centralizing data storage across the R&D value chain. |
| AI-Powered Data Harmonization | Uses NLP and advanced analytics to automatically cleanse, standardize, and enrich fragmented datasets [1]. | Integrating disparate data streams from R&D, clinical, and regulatory operations. |
| Privacy-Preserving Tokens | Enables the linkage of de-identified patient records across datasets while maintaining HIPAA compliance [3]. | Connecting real-world data with clinical trial data for longitudinal studies. |
| Unified Data Repository | A centralized platform for storing, organizing, and analyzing data from multiple sources and formats [4]. | Creating a single source of truth for all research and clinical data. |

Technical Support Center

Troubleshooting Guides

Guide: Diagnosing Supply Chain Data Gaps

Problem: Inability to assess supply chain vulnerability due to missing or siloed data on suppliers and inventory.

Diagnosis:

- Map Your Data Sources: Identify all systems holding supplier, inventory, and logistics data (e.g., ERP, supplier portals, logistics software).

- Check for Single Points of Failure: Analyze your supplier list for critical materials sourced from a single provider or region [5].

- Audit Data Completeness: Verify that key fields (e.g., supplier secondary sources, lead times, inventory levels) are populated for critical items [6].

Solution: Centralize data into a single platform. Implement automated monitoring for lead times and inventory levels to enable proactive alerts [5].
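The single-point-of-failure and completeness checks in this guide can be sketched in a few lines once supplier data sits in one place. The field names (`material`, `supplier`, `secondary_source`, `lead_time_days`, `inventory_level`) and the sample rows are hypothetical. A real audit would map them to the columns of the ERP or supplier portal in use.

```python
from collections import defaultdict

# Hypothetical key fields that should be populated for every critical item.
REQUIRED_FIELDS = ("secondary_source", "lead_time_days", "inventory_level")


def single_source_materials(supply_rows):
    """Return materials that are supplied by exactly one provider."""
    suppliers = defaultdict(set)
    for row in supply_rows:
        suppliers[row["material"]].add(row["supplier"])
    return sorted(m for m, names in suppliers.items() if len(names) == 1)


def incomplete_rows(supply_rows, required=REQUIRED_FIELDS):
    """Map (material, supplier) to the list of required fields left empty."""
    gaps = {}
    for row in supply_rows:
        # A value of 0 is a valid inventory level, so only None and "" count as missing.
        missing = [f for f in required if row.get(f) in (None, "")]
        if missing:
            gaps[(row["material"], row["supplier"])] = missing
    return gaps


rows = [
    {"material": "growth medium", "supplier": "A", "secondary_source": "B",
     "lead_time_days": 14, "inventory_level": 120},
    {"material": "growth medium", "supplier": "B", "secondary_source": "A",
     "lead_time_days": 21, "inventory_level": 0},
    {"material": "reference standard", "supplier": "C", "secondary_source": None,
     "lead_time_days": 45, "inventory_level": 3},
]

at_risk = single_source_materials(rows)
gaps = incomplete_rows(rows)
print(at_risk)
print(gaps)
```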

Guide: Resolving AI Model Data Quality Errors

Problem: AI/ML models for predictive supply chain analysis produce unreliable or erroneous outputs.

Diagnosis:

- Validate Input Data: Check for inconsistencies, missing values, or formatting discrepancies in the data fed into the model [7].

- Review Data Integration: If using multiple data sources (e.g., EHR, wearables, supplier APIs), ensure they are properly harmonized and mapped to a standard model like CDISC SDTM [6].

- Check for Data Drift: Investigate if the statistical properties of the live input data have shifted from the data used to train the model.

Solution: Implement automated data validation checks at the point of entry. Use standardized data models and conduct regular audits to maintain data quality [6] [7].
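A first-pass data drift check can compare the mean of live inputs against the training data, expressed in training standard deviations. The sketch below is deliberately crude and rests on assumptions: the threshold of 2.0 and the sample values are illustrative, and production monitoring often uses distribution-level measures such as the population stability index or a Kolmogorov-Smirnov test instead.

```python
import statistics


def mean_shift_score(training_values, live_values):
    """Distance of the live mean from the training mean, in training standard deviations."""
    baseline_mean = statistics.fmean(training_values)
    baseline_sd = statistics.stdev(training_values)
    if baseline_sd == 0:
        raise ValueError("training data has zero variance")
    return abs(statistics.fmean(live_values) - baseline_mean) / baseline_sd


def has_drifted(training_values, live_values, threshold=2.0):
    """Flag drift when the mean shift exceeds an (assumed) threshold."""
    return mean_shift_score(training_values, live_values) > threshold


# Hypothetical lead times in days for one supplier feature.
training = [10, 12, 11, 13, 9, 11, 10, 12]
live_stable = [11, 10, 12, 11]
live_shifted = [16, 17, 15, 18]

print(has_drifted(training, live_stable))
print(has_drifted(training, live_shifted))
```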

Guide: Addressing Regulatory Compliance Flags

Problem: Systems flag potential non-compliance with new data regulations during a research experiment.

Diagnosis:

- Identify the Regulation: Determine which specific regulation is causing the flag (e.g., GDPR for EU participant data, HIPAA for health information) [6].

- Trace the Data: Locate all instances where the regulated data is stored, processed, or transferred within your systems.

- Review Consent Documentation: Verify that participant consent forms explicitly cover the current data processing activities [6].

Solution: Develop clear data governance policies. Collaborate with legal and compliance teams to ensure data handling meets all regional requirements where you operate [8] [7].

Frequently Asked Questions (FAQs)

Q1: Our supply chain is often disrupted. What is the first step to making it more resilient? A1: Begin by moving from a "fragile," precision-obsessed planning model to a more adaptive one. This involves understanding the impacts of uncertainty through experimentation and stress-testing your supply chain model, rather than just trying to create the most accurate single plan [9].

Q2: What are the most critical regulatory pressures to watch in 2025? A2: Key areas include growing regulatory divergence between states and countries, a complex patchwork of AI and data privacy laws, and heightened focus on cybersecurity and consumer protection where harm is "direct and tangible" [8]. Proactive monitoring is essential as these regulations evolve rapidly.

Q3: How can we start using AI when our data is messy and siloed? A3: First, invest in data centralization and governance [7]. Then, begin with targeted pilot projects in less critical functions. Most organizations are in the early stages; only about one-third have scaled AI across the enterprise. Focus on specific use cases, such as using AI to automate data processing tasks, before attempting enterprise-wide transformation [10].

Q4: We are considering nearshoring. What factors should influence our location decision? A4: Key factors include:

- Labor Costs: Compare wages across potential countries [11].

- Lead Times & Shipping Costs: Proximity to end consumers can significantly reduce both [11].

- Trade Policies: Leverage free trade agreements (e.g., USMCA) to reduce tariffs [11].

- Government Incentives: Many governments offer subsidies and tax incentives for domestic investment [11].

Q5: What is an "AI agent" and how is it different from the AI we use now? A5: Most current AI is used for discrete tasks (e.g., analysis, prediction). An AI agent is a system capable of planning and executing multi-step workflows in the real world with less human intervention (e.g., autonomously managing a service desk ticket from start to finish). Only 23% of organizations are scaling their use of AI agents, which are most common in IT and knowledge management functions [10].

Table 1: AI Adoption and Impact Metrics (2024-2025)

| Metric | Value | Source / Context |
|---|---|---|
| Organizations using AI | 88% | In at least one business function [10] |
| Organizations scaling AI | ~33% | Across the enterprise [10] |
| AI High Performers | 6% | Organizations seeing significant EBIT impact from AI [10] |
| Enterprises using AI Agents | 62% | At least experimenting with AI agents [10] |
| U.S. Private AI Investment | $109.1B | In 2024 [12] |
| Top Cost-Saving Use Cases | Software Engineering, Manufacturing, IT | From individual AI use cases [10] |

Table 2: Global Supply Chain Maturity Distribution

| Supply Chain State | Description | Prevalence |
|---|---|---|
| Fragile | Loses value when exposed to uncertainty; reliant on precision-focused planning. | 63% (Majority) [9] |
| Resilient | Maintains value during disruption; uses scenario-based planning and redundancy. | ~8% (Fully resilient) [9] |
| Antifragile | Gains value amid uncertainty; employs probabilistic modeling and stress-testing. | ~6% (Fully antifragile) [9] |

Table 3: Key Regulatory Pressure Indicators for 2025

| Regulatory Area | Pressure Level & Trend | Key Focus for H2 2025 |
|---|---|---|
| Regulatory Divergence | High, Increasing | Preemption of state laws, shifts in enforcement focus [8]. |
| Trusted AI & Systems | High, Increasing | Interwoven policy on AI, data privacy, and energy infrastructure [8]. |
| Cybersecurity & Info Protection | High, Increasing | Expansion of state-level infrastructure security and data protection rules [8]. |
| Financial & Operational Resilience | Medium, Stable | Regulatory tailoring of oversight frameworks for primary financial risks [8]. |

Experimental Protocols

Protocol 1: Stress-Testing for Supply Chain Antifragility

Objective: To evaluate and enhance a supply chain's ability to not just withstand but capitalize on disruptions.

Methodology:

- Model Creation: Develop a probabilistic digital model of your supply chain resource performance, aligning network design and sales and operations planning (S&OP) [9].

- Define Shock Parameters: Identify key variables to disrupt (e.g., lead times from a specific region, raw material costs, shipping capacity) [9].

- Execute Stress Tests: Run simulations that apply extreme, but plausible, disruptions to the model. Purposefully disrupt the model to assess outcomes across a wide range of uncertainty levels [9].

- Analyze Outcomes: Move beyond simple plan attainment metrics. Focus on how resource performance and value metrics (e.g., profitability, market share) respond to the shocks. The goal is to identify configurations where disruption creates relative gain [9].
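The "Execute Stress Tests" step can be illustrated with a small Monte Carlo simulation. The sketch below is a simplified stand-in, not the probabilistic modeling approach of [9]. It assumes normally distributed lead times, a fixed buffer, and three hypothetical shock scenarios that widen lead-time variability. The function name and all numbers are illustrative.

```python
import random


def on_time_rate(planned_days, sd_days, buffer_days, runs=10_000, seed=42):
    """Fraction of simulated deliveries that arrive within planned time plus buffer."""
    rng = random.Random(seed)  # fixed seed so scenarios are compared on the same draws
    on_time = 0
    for _ in range(runs):
        # Lead times cannot be negative, so clip the simulated value at zero.
        actual_days = max(0.0, rng.gauss(planned_days, sd_days))
        if actual_days <= planned_days + buffer_days:
            on_time += 1
    return on_time / runs


# Hypothetical shock parameters: standard deviation of lead time in days.
scenarios = {"baseline": 2.0, "port congestion": 6.0, "regional shutdown": 12.0}

results = {
    name: on_time_rate(planned_days=14, sd_days=sd, buffer_days=4)
    for name, sd in scenarios.items()
}

for name, rate in results.items():
    print(f"{name}: {rate:.1%} of deliveries within buffer")
```

Comparing how quickly the on-time rate degrades across scenarios shows which buffers or sourcing configurations hold value as uncertainty grows. The same loop could track profitability or other value metrics instead of delivery timing.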

Protocol 2: Implementing a Supplier Management Program

Objective: To promote transparency and mitigate risk in the facilities management (FM) supply chain.

Methodology:

- Due Diligence: Establish minimum global standards for supplier selection. Evaluate potential suppliers for compliance with local regulations, financial stability, labor practices, and safety standards [5].

- Continuous Monitoring: Move beyond annual reviews. Establish KPIs and a regular reporting cadence. Use integrated technology and AI to monitor supplier financial health and identify issues proactively [5].

- Foster Accountability & Innovation: Create a culture of accountability with clear performance expectations. Engage strategic suppliers in collaborative problem-solving and innovation challenges to build a resilient, world-class supply chain [5].

Protocol 3: Data Integrity and Validation for AI Readiness

Objective: To ensure data is accurate, complete, and fit for use in AI models and advanced analytics.

Methodology:

- Implement Validation at Entry: Deploy automated data validation checks at the point of entry to minimize errors. This includes range checks, format validation, and mandatory field enforcement [7].

- Standardize Data Collection: Create uniform Standard Operating Procedures (SOPs) and data dictionaries for all data sources to minimize site-to-site or system-to-system variability [6].

- Conduct Regular Audits: Perform scheduled and random audits of centralized data to identify and rectify discrepancies, inconsistencies, and missing information [7].

- Establish Data Governance: Develop a formal data governance framework that outlines data ownership, stewardship, access controls, and lifecycle management processes [7].
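The three entry-point checks named in this protocol (mandatory fields, format validation, range checks) could look like the following sketch. The field names, the sample ID pattern, and the temperature limits are hypothetical choices for illustration. A real deployment would take them from the lab's data dictionary.

```python
import re

# Hypothetical ID format: two capital letters, a hyphen, four digits.
SAMPLE_ID_PATTERN = re.compile(r"^[A-Z]{2}-\d{4}$")
REQUIRED = ("sample_id", "collected_on", "leaf_temp_c")


def validate_entry(entry):
    """Return a list of human-readable errors; an empty list means the entry passes."""
    errors = []
    # Mandatory field enforcement.
    for field in REQUIRED:
        if entry.get(field) in (None, ""):
            errors.append(f"{field}: required")
    # Format validation.
    sample_id = entry.get("sample_id")
    if sample_id and not SAMPLE_ID_PATTERN.match(sample_id):
        errors.append("sample_id: bad format")
    # Range check (assumed plausible limits in degrees Celsius).
    temp = entry.get("leaf_temp_c")
    if isinstance(temp, (int, float)) and not -10 <= temp <= 60:
        errors.append("leaf_temp_c: out of range")
    return errors


good = {"sample_id": "AT-0042", "collected_on": "2024-11-21", "leaf_temp_c": 24.5}
bad = {"sample_id": "at42", "collected_on": "", "leaf_temp_c": 95}

print(validate_entry(good))
print(validate_entry(bad))
```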

Visualizations

Diagram 1: Supply Chain Maturity Spectrum

Diagram 2: Centralized Data Management Workflow

Diagram 3: AI Agent Orchestration in Research

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Data Management "Reagents" for Centralized Research

| Tool / Solution | Function in the Experiment / Research Process |
|---|---|
| Electronic Data Capture (EDC) Systems | Digitizes data collection at the source, reducing manual entry errors and providing built-in validation checks for higher data quality [6]. |
| CDISC Standards (e.g., SDTM, ADaM) | Provides standardized data models for organizing and analyzing clinical and research data, ensuring interoperability and streamlining regulatory submissions [6]. |
| Data Integration Platforms | Acts as middleware to seamlessly connect disparate data sources (e.g., EHR, lab systems, wearables), converting and routing data into a unified format [6]. |
| Data Governance Framework | A formal system of decision rights and accountabilities for data-related processes, ensuring data is managed as a valuable asset according to clear policies and standards [7]. |
| AI-Powered Monitoring Tools | Uses AI and automation to provide real-time visibility into supply chain or experimental data flows, proactively identifying disruptions, anomalies, and performance issues [5]. |

The High Cost of Data Downtime and Poor Data Quality in Research

In modern plant research, data has become a primary asset. However, data downtime—periods when data is incomplete, erroneous, or otherwise unavailable—and poor data quality present significant and often underestimated costs. These issues directly compromise research integrity, delay project timelines, and waste substantial financial resources. For research facilities operating on fixed grants and tight schedules, the impact extends beyond mere inconvenience to fundamentally hinder scientific progress. This technical support center provides plant researchers with actionable strategies to diagnose, troubleshoot, and prevent these costly data management problems, thereby supporting the broader goal of implementing effective centralized data management.

Understanding the Problem: Data Downtime and Quality

What are Data Downtime and Poor Data Quality?

- Data Downtime: Any period when a dataset cannot be used for its intended research purpose due to being unavailable, incomplete, or unreliable. In a practical research context, this could mean a genomic dataset is corrupted during transfer, a shared data resource is inaccessible due to network failure, or a data pipeline processing plant phenotyping images breaks down.

- Poor Data Quality: Data that suffers from issues such as inaccuracy, incompleteness, inconsistency, or a lack of proper provenance (metadata) [13]. Examples include mislabeled plant samples, missing environmental sensor readings, inconsistent units of measurement across datasets, or image files without timestamps.

The Quantitative and Qualitative Costs

The following table summarizes the multifaceted costs associated with data-related issues in research, synthesizing insights from manufacturing downtime and research data management principles [14] [15].

Table 1: The Costs of Data Downtime and Poor Data Quality

| Cost Category | Specific Impact on Research | Estimated Financial / Resource Drain |
|---|---|---|
| Direct Financial Loss | Wasted reagents and materials used in experiments based on faulty data; grant money spent on salaries and resources during non-productive periods. | Studies in manufacturing show unplanned downtime can cost millions per hour; while harder to quantify in labs, the principle of idle resources applies directly [15]. |
| Lost Time & Productivity | Researchers' time spent identifying, diagnosing, and correcting data errors instead of performing analysis [13]; delayed publication timelines and missed grant application deadlines. | A single downtime incident can result in weeks of lost productivity. In manufacturing, the average facility faces 800 hours of unplanned downtime annually [15]. |
| Compromised Research Integrity | Inability to reproduce or replicate study results, undermining scientific validity [13]; drawing incorrect conclusions from low-quality or incomplete data, leading to retractions or erroneous follow-up studies. | The foundational principle of scientific reproducibility is compromised, which is difficult to quantify but devastating to a research program's credibility. |
| Inefficient Resource Use | Redundant data collection when original data is lost or unusable [16]; high costs of data storage for large, redundant, or low-value datasets. | One study found that using high-quality data can achieve the same model performance with significantly less data, saving on collection, storage, and processing costs [16]. |
| Reputational Damage | Loss of trust from collaborators and funding bodies; difficulty attracting talented researchers to the lab. | In industry, this can lead to a loss of customer trust and damage to brand reputation; in academia, it translates to a weaker scientific standing [15]. |

Technical Support Center: FAQs & Troubleshooting

Frequently Asked Questions (FAQs)

Q1: Our team often ends up with inconsistent data formats (e.g., for plant phenotype measurements). How can we prevent this? A1: Implement a Standardized Data Collection Protocol.

- Action: Create and enforce the use of a lab-wide data collection template for specific experiments. This should define standard file formats (e.g., CSV, not XLSX), controlled vocabularies for sample states (e.g., "flowering," "senescence"), and required units (e.g., always "μmol m⁻² s⁻¹" for photosynthesis).

- Centralized Management Benefit: A centralized data management system can host these templates and protocols, ensuring every researcher uses the same standard from the start [13].

Q2: We've lost critical data from a plant growth experiment due to a hard drive failure. How can we avoid this? A2: Adhere to the 3-2-1 Rule of Data Backup.

- Action: Maintain at least 3 total copies of your data, stored on 2 different types of media (e.g., a local server and cloud storage), with 1 copy kept off-site (e.g., an institutional cloud service).

- Centralized Management Benefit: A centralized system often includes automated, regular backups to resilient and geographically redundant storage, protecting against local hardware failures, theft, or natural disasters [13].
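The 3-2-1 rule is simple enough to check automatically against a backup inventory. In the sketch below the inventory format (a list of dictionaries with `media` and `offsite` keys) and the sample entries are assumptions for illustration.

```python
def satisfies_3_2_1(copies):
    """Check a backup inventory against the 3-2-1 rule.

    copies: list of dicts, each with a 'media' label and an 'offsite' flag.
    """
    media_types = {copy["media"] for copy in copies}
    has_offsite = any(copy["offsite"] for copy in copies)
    return len(copies) >= 3 and len(media_types) >= 2 and has_offsite


# Hypothetical inventories for one plant growth dataset.
before = [
    {"media": "workstation disk", "offsite": False},
    {"media": "workstation disk", "offsite": False},
]
after = [
    {"media": "workstation disk", "offsite": False},
    {"media": "local server", "offsite": False},
    {"media": "institutional cloud", "offsite": True},
]

print(satisfies_3_2_1(before))
print(satisfies_3_2_1(after))
```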

Q3: A collaborator cannot understand or reuse our transcriptomics dataset from six months ago. What went wrong? A3: This is a failure of Provenance and Metadata Documentation.

- Action: For every dataset, create a detailed "README" file or use a metadata standard that describes the experimental design, sample identifiers, data processing steps, software versions, and any data transformations applied.

- Centralized Management Benefit: Centralized platforms often force metadata entry upon data upload and provide structured fields based on community standards (e.g., MIAME for transcriptomics), making data inherently more understandable and reusable [17].

Q4: We have a large image dataset for pest identification, but training a model is taking too long and performing poorly. Is more data the only solution? A4: Not necessarily. Focus on Data Quality over Quantity.

- Action: Before collecting more data, assess the quality of your existing set. A study on crop pest recognition found that a selected subset of high-quality, information-rich data could achieve the same performance as a much larger, unfiltered dataset [16]. Techniques like the Embedding Range Judgment (ERJ) method can help identify the most valuable samples.

- Centralized Management Benefit: A centralized system can facilitate the application of quality metrics and filters across large datasets, helping researchers curate high-quality subsets for analysis, saving storage and computational costs [16].

Troubleshooting Guides

Problem: Inaccessible or "Lost" Data File

This is a classic case of data downtime where a required dataset is not available for analysis.

- Step 1: Check Local and Network Drives. Verify the file hasn't been moved to a different local folder. If using a network drive, confirm connectivity and permissions.

- Step 2: Consult Lab Data Inventory. Check the lab's central data log or catalog (if one exists) for the file's documented location and unique identifier.

- Step 3: Restore from Backup.

  - If using a centralized system with versioning: Access the system's backup or previous version to restore the file.

  - If using manual backups: Locate your most recent backup on an external drive or cloud service.

- Step 4: Verify Data Integrity. Once restored, check that the file opens correctly and its contents are intact.

- Prevention Strategy: Implement a centralized data repository with a logical, enforced folder structure and automated backup policies. This eliminates the "where is the file?" problem and provides a recovery path [13].
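Step 4 can go beyond "the file opens" if a checksum was recorded when the file was first stored. The sketch below uses SHA-256 from Python's standard `hashlib` module. The helper names and the demo file are hypothetical, and it assumes the lab keeps the recorded checksum alongside the data inventory.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_intact(path, expected_hex):
    """True when the file's current digest matches the recorded one."""
    return sha256_of_file(path) == expected_hex


# Demonstration with a throwaway file.
with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "counts.csv")
    with open(path, "wb") as handle:
        handle.write(b"gene,count\nAT1G01010,42\n")
    recorded = sha256_of_file(path)          # stored at upload time
    intact_before = is_intact(path, recorded)
    with open(path, "ab") as handle:
        handle.write(b"corrupted")           # simulate damage during transfer
    intact_after = is_intact(path, recorded)

print(intact_before, intact_after)
```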

Problem: Inconsistent Results Upon Data Reanalysis

This suggests underlying data quality issues, such as undocumented processing steps or version mix-ups.

- Step 1: Check Data Provenance. Review the metadata and processing logs associated with the dataset. What were the exact software parameters and scripts used initially?

- Step 2: Verify Data Version. Ensure you are working on the correct version of the dataset. A centralized system with version control (like Git for code) is ideal for this.

- Step 3: Reproduce the Data Processing Pipeline. Use the documented workflow (e.g., a Snakemake or Nextflow script) to reprocess the raw data from scratch and see if the results match.

- Step 4: Audit for Data Contamination. Check for and remove any accidental duplicate entries or mislabeled samples that could skew the analysis.

- Prevention Strategy: Use an electronic lab notebook (ELN) to document analyses, and manage data with a system that tracks versions and provenance automatically. This makes the entire research process more reproducible [13] [17].

Experimental Protocols for Ensuring Data Quality

Protocol: Data Collection and Annotation for Plant Imaging

This protocol ensures high-quality, reusable data from plant phenotyping or pest imaging experiments [16].

- Equipment Setup:

  - Calibrate the imaging system (camera or scanner) according to manufacturer specifications.

  - Use a standardized color checker card in the first image of a session to ensure color fidelity.

  - Maintain consistent lighting conditions across all imaging sessions.

- Image Capture:

  - Use a consistent naming convention: [PlantID]_[Date(yyyymmdd)]_[ViewAngle].jpg (e.g., PlantA_20241121_top.jpg).

  - Capture images at a standardized resolution and include a scale bar.

- Metadata Annotation:

  - At capture, record in a spreadsheet or dedicated software: Plant ID, Genotype, Treatment, Time Point, Photographer, and any anomalies.

- Initial Quality Control (QC):

  - Immediately after capture, visually inspect a subset of images for focus, lighting, and correct labeling.

  - Re-take any images that do not meet quality standards.

- Data Upload and Storage:

  - Upload raw images and their associated metadata to the centralized data management platform promptly after QC.
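The file naming convention in this protocol can be enforced at upload time. The sketch below parses names of the form PlantID_yyyymmdd_ViewAngle.jpg and rejects anything else, including impossible dates. The pattern assumes plant IDs and view angles are alphanumeric, which the protocol does not state, and `parse_image_name` is a hypothetical helper.

```python
import re
from datetime import datetime

# Assumes alphanumeric plant IDs and view angles.
FILENAME_PATTERN = re.compile(
    r"^(?P<plant_id>[A-Za-z0-9]+)_(?P<date>\d{8})_(?P<view>[A-Za-z0-9]+)\.jpg$"
)


def parse_image_name(filename):
    """Return the parsed name parts, or None if the name breaks the convention."""
    match = FILENAME_PATTERN.match(filename)
    if match is None:
        return None
    try:
        # Rejects eight-digit strings that are not real calendar dates.
        captured = datetime.strptime(match["date"], "%Y%m%d").date()
    except ValueError:
        return None
    return {"plant_id": match["plant_id"], "date": captured, "view": match["view"]}


parsed = parse_image_name("PlantA_20241121_top.jpg")
bad_date = parse_image_name("PlantA_20240230_top.jpg")
bad_shape = parse_image_name("PlantA-top.jpg")

print(parsed)
print(bad_date, bad_shape)
```

Because the parsed fields include the plant ID and capture date, the same function can pre-fill part of the metadata record described under Metadata Annotation.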

Protocol: The Embedding Range Judgment (ERJ) Method for Data Selection

This methodology, derived from research, helps select the most informative data samples to maximize model performance without requiring massive datasets [16]. The workflow is designed to be implemented in a computational environment like Python.

ERJ Method Workflow

Objective: To iteratively select the most valuable samples from a large pool of unlabeled (or poorly labeled) data to improve a machine learning model efficiently.

Inputs:

- Base Data (S): A small, trusted set of labeled data (e.g., 80 samples per class).

- Pool Data (S): A larger set of data (labeled or unlabeled) from which to select (e.g., 720 samples per class).

Procedure:

- Initial Model Training: Fine-tune a pre-trained feature extractor (e.g., a convolutional neural network) on the Base Data [16].

- Establish Feature Baseline: Pass the Base Data through the trained feature extractor to obtain their feature embeddings (high-dimensional vectors). Calculate the range of these embeddings for each dimension [16].

- Evaluate Pool Data: Pass the Pool Data through the same feature extractor to get their embeddings.

- Information Value Judgment: For each sample in the Pool Data, check if its embedding has values that fall outside the established range of the Base Data in several dimensions. These "out-of-range" samples are considered to have high information value as they represent novelty to the current model [16].

- Iterative Selection: Select a batch of the highest-value samples (e.g., 40 per class), add them to the Base Data, and repeat steps 1-4 until the desired performance or data budget is reached [16].

- Final Training: Train the final production model on the final, enriched Base Data.
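The range-based judgment at the core of this procedure can be sketched without a neural network by starting from embeddings that have already been extracted. The code below is an illustration under assumptions rather than the implementation from [16]. The toy three-dimensional embeddings are invented, ranking candidates by the number of out-of-range dimensions is an assumed scoring rule, and `min_dimensions` is a hypothetical parameter standing in for "several dimensions".

```python
def embedding_ranges(base_embeddings):
    """Per-dimension (min, max) over the Base Data embeddings."""
    return [(min(column), max(column)) for column in zip(*base_embeddings)]


def out_of_range_count(embedding, ranges):
    """Number of dimensions in which an embedding falls outside the base range."""
    return sum(
        1 for value, (low, high) in zip(embedding, ranges)
        if value < low or value > high
    )


def select_informative(pool_embeddings, ranges, batch_size, min_dimensions=1):
    """Indices of the pool samples with the most out-of-range dimensions."""
    scored = [
        (out_of_range_count(embedding, ranges), index)
        for index, embedding in enumerate(pool_embeddings)
    ]
    candidates = [pair for pair in scored if pair[0] >= min_dimensions]
    # Highest count first; ties broken by original order.
    candidates.sort(key=lambda pair: (-pair[0], pair[1]))
    return [index for _, index in candidates[:batch_size]]


# Toy embeddings; in practice these come from the fine-tuned feature extractor.
base = [[0.1, 0.5, 0.3], [0.4, 0.7, 0.2], [0.2, 0.6, 0.4]]
pool = [[0.3, 0.6, 0.3], [0.9, 0.6, 0.1], [0.2, 0.8, 0.3], [0.0, 0.4, 0.5]]

ranges = embedding_ranges(base)
selected = select_informative(pool, ranges, batch_size=2)
print(ranges)
print(selected)
```

In the iterative loop, the selected samples would be labeled, moved from the pool into the Base Data, and the ranges recomputed after the feature extractor is fine-tuned again.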

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Digital & Physical Tools for Data-Management in Plant Research

| Item | Function in Research | Role in Data Management & Quality |

|---|---|---|

| Electronic Lab Notebook (ELN) | Digital replacement for paper notebooks to record experiments, observations, and procedures. | Provides the foundational layer for data provenance by directly linking raw data files to experimental context and protocols [13]. |

| Centralized Data Repository | A dedicated server or cloud-based system (e.g., based on Dataverse, S3) for storing all research data. | Prevents data silos and loss by providing a single source of truth. Enforces access controls, backup policies, and often metadata standards [13]. |

| Metadata Standards | Structured schemas (e.g., MIAPPE for plant phenotyping) defining which descriptive information must be recorded. | Makes data findable, understandable, and reusable by others and your future self, directly supporting FAIR principles [13] [17]. |

| Version Control System (e.g., Git) | A system to track changes in code and sometimes small data files over time. | Essential for reproducibility of data analysis; allows you to revert to previous states and collaborate on code without conflict. |

| Automated Data Pipeline Tools (e.g., Nextflow, Snakemake) | Frameworks for creating scalable and reproducible data workflows. | Reduces human error in data processing by automating multi-step analyses, ensuring the same process is applied to every dataset [13]. |

| Reference Materials & Controls | Physical standards (e.g., control plant lines, chemical standards) used in experiments. | Generates reliable and comparable quantitative data across experimental batches and over time, improving data quality at the source. |

Defining Centralized Data Management and Its Core Objectives for Research Facilities

Frequently Asked Questions

What is centralized data management in a research context? Centralized data management refers to the consolidation of data from multiple, disparate sources into a single, unified repository, such as a data warehouse or data lake [18]. In a research facility, this means integrating data from various instruments, experiments, and lab systems to create a single source of truth. The core objective is to make data more accessible, manageable, and reliable for analysis, thereby supporting reproducible and collaborative science [19] [20].

Why is a Data Management Plan (DMP) critical for a research facility? A Data Management Plan (DMP) is a formal document that outlines the procedures for handling data throughout and after the research process [21]. For research facilities, it is often a mandatory component of funding proposals [22]. A DMP is crucial because it ensures data is collected, documented, and stored in a way that preserves its integrity, enables sharing, and complies with regulatory and funder requirements. Failure to adhere to an approved DMP can negatively impact future funding opportunities [22].

Our plant science research involves complex genotype-by-environment (GxE) interactions. How can centralized data help? Centralized data management is particularly vital for studying complex interactions like GxE and GxExM (genotype-by-environment-by-management) [23]. By integrating large, multi-dimensional datasets from genomics, phenomics, proteomics, and environmental sensors into a single repository, researchers can more effectively use machine learning and other AI techniques to uncover correlations and build predictive models that would be difficult to discover with fragmented data [23] [17].

What is a "single source of truth" and why does it matter? A "single source of truth" is a centralized data repository that provides a consistent, accurate, and reliable view of key organizational or research data [24] [18]. It matters because it eliminates data silos and conflicting versions of data across different departments or lab groups. This ensures that all researchers are basing their analyses and decisions on the same consistent information, which enhances data integrity and trust in research outcomes [19] [20].

How does centralized management improve data security? Centralizing data allows for the implementation of robust, consistent security measures across the entire dataset. Instead of managing security across numerous fragmented systems, a centralized approach enables stronger access controls, encryption, and audit trails on a single infrastructure, simplifying compliance with regulations like GDPR or HIPAA [21] [18].

Troubleshooting Common Data Management Challenges

| Challenge | Root Cause | Solution & Best Practices |

|---|---|---|

| Data Silos & Inconsistent Formats | Different lab groups or instruments using isolated systems and non-standardized data formats [23]. | Implement consistent schemas and field names [25]. Establish and enforce data standards across the facility. Use integration tools (ETL/ELT) to automatically transform and harmonize data from diverse sources into a unified schema upon ingestion [18]. |

| Poor Data Quality & Integrity | Manual data entry errors, lack of validation rules, and no centralized quality control process [21]. | Establish continuous quality control [25]. Implement automated validation checks at the point of data entry (e.g., within electronic Case Report Forms) [21]. Perform regular, automated audits of the central repository to check for internal and external consistency [25]. |

| Difficulty Tracking Data Provenance | Lack of versioning and auditing, making it hard to trace how data was generated or modified [25]. | Enforce versioning, access control, and auditing [25]. Use a system that automatically tracks changes to datasets (audit trails), records who made the change, and allows you to revert to previous versions if necessary. This is critical for reproducibility. |

| Resistance to Adoption & Collaboration | Organizational culture of data ownership ("my data") and lack of training on new centralized systems [19]. | Promote active collaboration and training [25]. Involve researchers early in the design of the data system. Provide comprehensive training and demonstrate the benefits of a data-driven culture. Foster an environment that values "our data" to break down silos [18]. |

| Integrating Diverse Data Types | Challenges in combining structured (e.g., spreadsheets), semi-structured (e.g., JSON), and unstructured (e.g., images, notes) data [23] [17]. | Select the appropriate storage solution. Use a data warehouse for structured, analytics-ready data and a data lake to store raw, unstructured data like plant phenotyping images or genomic sequences. This hybrid approach accommodates diverse data needs [18] [20]. |

Experimental Protocols for Data Management

Protocol 1: Implementing a Phased Data Centralization Strategy

Adopting a centralized system can be daunting. An incremental, phased approach is recommended to lower stress and allow for process adjustments, especially when dealing with limited budgets and resources [26].

- Objective: To successfully centralize data management by starting with a manageable scope and expanding integration over time.

- Methodology:

- Identify & Set Goals: Define specific, attainable short-term goals for data use. Identify every point where data is generated or captured and where it currently resides within the organization [26] [24].

- Prioritize Data: Conduct a data audit. Focus on the information most critical to your primary research objectives, such as specific phenotyping or genomic data, rather than trying to centralize everything at once [26] [23].

- Pilot Integration: Begin by integrating just two or three key data sources (e.g., linking your lab information management system (LIMS) with your experimental electronic notebook). This foundation provides a launchpad for further integration [26].

- Iterate and Expand: Use reports and dashboards from the initial phase to demonstrate success and justify further investment. Gradually integrate more data sources based on research priorities [26] [25].
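
As a rough illustration of the pilot integration step, the sketch below links records from two sources on a shared sample identifier and reports entries that cannot be matched. The field names (`sample_id`, `experiment`, `protocol`) and the sample values are hypothetical; real LIMS and ELN exports will differ.

```python
# Hypothetical exports from a LIMS and an electronic lab notebook (ELN).
lims_samples = [
    {"sample_id": "S-001", "species": "Zea mays", "tissue": "leaf"},
    {"sample_id": "S-002", "species": "Zea mays", "tissue": "root"},
]
eln_entries = [
    {"sample_id": "S-001", "experiment": "EXP-12", "protocol": "RNA extraction"},
    {"sample_id": "S-003", "experiment": "EXP-13", "protocol": "phenotyping"},
]


def link_sources(lims, eln):
    """Join ELN entries to LIMS samples on sample_id; collect unmatched IDs."""
    by_id = {row["sample_id"]: row for row in lims}
    linked, unmatched = [], []
    for entry in eln:
        sample = by_id.get(entry["sample_id"])
        if sample is None:
            unmatched.append(entry["sample_id"])
        else:
            # Merge sample metadata with its experimental context.
            linked.append({**sample, **entry})
    return linked, unmatched


linked, unmatched = link_sources(lims_samples, eln_entries)
```

The list of unmatched identifiers is often the most useful output of a pilot, because it shows where identifiers diverge between systems before more sources are added.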

Protocol 2: Establishing a Data Governance Framework

A data governance framework provides the policies and procedures necessary to maintain data integrity, security, and usability in a centralized system [19].

- Objective: To ensure centralized data remains accurate, consistent, and secure throughout its lifecycle.

- Methodology:

- Define Policies: Establish clear policies for data access, usage, and sharing. Define who can access what data and under which circumstances [19].

- Ensure Data Integrity: Implement validation rules, error-checking routines, and scheduled audits to identify and correct discrepancies promptly [19].

- Protect Data Privacy: Apply security measures like role-based access control, encryption, and data anonymization to protect sensitive research information and comply with regulations [21] [18].

- Assign Ownership: Designate data stewards responsible for the quality and management of specific datasets within the repository [19].
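
The access policy and audit requirements above can be pictured with a small sketch. It assumes a simple role-to-permission mapping and an in-memory, append-only log; the role names, actions, and dataset name are hypothetical, and a production system would rely on the access controls of the chosen repository rather than custom code.

```python
from datetime import datetime, timezone

# Hypothetical roles and permissions; a real facility would define its own.
ROLE_PERMISSIONS = {
    "data_steward": {"read", "write", "approve"},
    "researcher": {"read", "write"},
    "guest": {"read"},
}

audit_log = []  # append-only record of every access decision


def request_access(user, role, action, dataset):
    """Return True if the role permits the action; log the decision either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "dataset": dataset,
        "allowed": allowed,
    })
    return allowed


granted = request_access("alice", "researcher", "write", "phenotyping_2025")
denied = request_access("bob", "guest", "write", "phenotyping_2025")
```

Logging denied requests as well as granted ones keeps the audit trail complete, which is what makes later reviews of access policy possible.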

Centralized Data Management Workflow

The following diagram illustrates the logical flow and components of a centralized data management system in a research facility.

The Scientist's Toolkit: Essential Solutions for Data Management

| Tool Category | Examples | Function in Research |

|---|---|---|

| Data Storage & Repositories | Data Warehouses (e.g., Snowflake, BigQuery), Data Lakes (e.g., Amazon S3) [26] [18] | Provides a centralized, scalable repository for structured (warehouse) and raw/unstructured (lake) research data, enabling complex queries and analysis [18] [20]. |

| Data Integration & Pipelines | ETL/ELT Tools (e.g., Fivetran), RudderStack [26] [18] | Automates the process of Extracting data from source systems (e.g., instruments), Transforming it into a consistent format, and Loading it into the central repository [26]. |

| Data Governance & Security | Access Control Systems, Encryption Tools, Audit Trail Features [21] | Ensures data integrity, security, and compliance by managing user permissions, protecting sensitive data, and tracking all data access and modifications [25] [19]. |

| Analytics & Visualization | Business Intelligence (BI) Platforms (e.g., Power BI, Tableau) [26] [18] | Allows researchers to explore, visualize, and create dashboards from centralized data, facilitating insight generation and data-driven decision-making without deep technical expertise [26] [25]. |

| Electronic Data Capture (EDC) | Clinical Data Management Systems (CDMS) like OpenClinica, Castor EDC [21] | Provides structured digital forms (eCRFs) for consistent and validated data collection in observational studies or clinical trials, directly feeding into the central repository [21]. |

Building Your Data Foundation: Architectures, Tools, and Implementation Strategies

For plant research facilities, the choice of a data architecture is a foundational decision that shapes how you store, process, and derive insights from complex experimental data. The challenge of integrating diverse data types—from genomic sequences and transcriptomics to spectral imaging and environmental sensor readings—requires a robust data management strategy. This guide provides a technical support framework to help you navigate the selection and troubleshooting of three core architectures: the traditional Data Warehouse, the flexible Data Lake, and the modern Data Lakehouse.

FAQs: Architectural Choices and Common Challenges

What are the core differences between a Data Warehouse, a Data Lake, and a Data Lakehouse?

The primary differences lie in their data structure, user focus, and core use cases. The table below provides a structured comparison to help you identify the best fit for your research needs [27].

| Feature | Data Warehouse | Data Lake | Data Lakehouse |

|---|---|---|---|

| Data Type | Structured | All (Structured, Semi-structured, Unstructured) | All (Structured, Semi-structured, Unstructured) |

| Schema | Schema-on-write (predefined) | Schema-on-read (flexible) | Schema-on-read with enforced schema-on-write capabilities |

| Primary Users | Business Analysts, BI Professionals | Data Scientists, ML Engineers, Data Engineers | All data professionals (BI, ML, Data Science, Data Engineering) |

| Use Cases | BI, Reporting, Historical Analysis | ML, AI, Exploratory Analytics, Raw Data Storage | BI, ML, AI, Real-time Analytics, Data Engineering, Reporting |

| Cost | High (proprietary software, structured storage) | Low (cheap object storage) | Moderate (cheap object storage with added management layer) |

Our facility deals with massive, unstructured data like plant imagery. Is a Data Lake our only option?

While a Data Lake is an excellent repository for raw, unstructured data like plant imagery [27], a Data Lakehouse may be a superior long-term solution. A Lakehouse allows you to store the raw images cost-effectively while also providing the data management and transaction support necessary for reproducible analysis [27] [28].

For example, in quantifying complex fruit colour patterning, researchers store high-resolution images in a system that allows for both the initial data-driven colour summarization and subsequent analytical queries [29]. A Lakehouse architecture supports this entire workflow in one platform, preventing the data "swamp" issue common in lakes and enabling both scientific discovery and standardized reporting.

We need to integrate heterogeneous data types. How can we structure this process?

Integrating multi-omics data (genomics, transcriptomics, proteomics, metabolomics) is a common challenge in plant biology [17]. A structured process is key. The workflow below outlines a robust methodology for data integration, from raw data to actionable biological models.

Experimental Protocol: Data Integration for Genome-Scale Modeling [17]

- Data Acquisition: Collect multi-omics data from high-throughput techniques (e.g., HTS for genomics, mass spectrometry for metabolomics).

- Constraint-Based Modeling: Reconstruct a genome-scale metabolic network using annotated genome information. This network represents the cell's functional metabolic structure and serves as the basis for flux predictions.

- Data Integration: Use transcriptomic and proteomic data to constrain the model's flux predictions. This involves activating or deactivating metabolic reactions in the model based on experimental observations.

- Validation and Iteration: Compare the model's predictions (e.g., metabolite levels) with independent experimental metabolomics data. Manually and algorithmically curate the model to improve accuracy and resolve network gaps.

- Insight Generation: Apply the validated model to investigate metabolic regulation, such as the effect of variable nitrogen supply on biomass formation in maize.
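
The data integration step (activating or deactivating reactions based on expression data) can be sketched as follows. This is a simplified illustration under stated assumptions, not the procedure of [17]: the reaction and gene names, the expression threshold, and the rule that a reaction is switched off only when all of its associated genes fall below the threshold are hypothetical choices.

```python
def constrain_by_expression(bounds, reaction_genes, expression, threshold=1.0):
    """Return new flux bounds with weakly expressed reactions deactivated.

    bounds:         reaction id -> (lower, upper) flux bound
    reaction_genes: reaction id -> list of associated gene ids
    expression:     gene id -> measured expression level
    """
    constrained = dict(bounds)
    for reaction, genes in reaction_genes.items():
        levels = [expression.get(g, 0.0) for g in genes]
        # Assumption: any one sufficiently expressed gene keeps the reaction active.
        if levels and max(levels) < threshold:
            constrained[reaction] = (0.0, 0.0)
    return constrained


# Hypothetical toy network and measurements.
bounds = {
    "R_nitrate_uptake": (0.0, 10.0),
    "R_glutamine_synth": (0.0, 8.0),
    "R_transport": (-5.0, 5.0),  # no gene association, left untouched
}
reaction_genes = {
    "R_nitrate_uptake": ["geneA"],
    "R_glutamine_synth": ["geneB", "geneC"],
}
expression = {"geneA": 12.4, "geneB": 0.2, "geneC": 0.1}

constrained = constrain_by_expression(bounds, reaction_genes, expression)
```

The constrained bounds would then be passed to a flux solver; comparing its predictions with independent metabolomics data corresponds to the validation step.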

How do we prevent our Data Lake from turning into an unmanageable "data swamp"?

A "data swamp" occurs when a data lake lacks governance, leading to poor data quality and reliability [27]. Prevention requires a combination of technology and process:

- Implement a Transactional Layer: Use open table formats like Apache Iceberg, Delta Lake, or Apache Hudi on top of your object storage. These technologies provide ACID transactions, ensuring data consistency and enabling features like time travel for data versioning [27] [28].

- Apply Schema Enforcement and Evolution: While allowing for flexibility, enforce schemas when data is ready for production use to guarantee data quality. Open table formats allow schemas to evolve safely without breaking pipelines [27].

- Maintain a Data Catalog: Create a centralized catalog that documents available datasets, their lineage, and ownership. This is crucial for discoverability and governance, especially in a collaborative research environment.
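
To make the idea of schema enforcement and safe evolution concrete, here is a conceptual sketch in plain Python. It does not use the API of Apache Iceberg, Delta Lake, or Apache Hudi; the field names and the rule that evolution may only add optional fields are assumptions chosen for illustration.

```python
def validate(record, schema, optional=frozenset()):
    """Return a list of schema violations for one record (empty list means valid)."""
    errors = []
    for field, expected_type in schema.items():
        if field not in record:
            if field not in optional:
                errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            errors.append(f"wrong type for {field}")
    for field in record:
        if field not in schema:
            errors.append(f"unexpected field: {field}")
    return errors


def add_optional_field(schema, optional, field, field_type):
    """Additive evolution: existing records stay valid because the field is optional."""
    if field in schema:
        raise ValueError(f"field already exists: {field}")
    return {**schema, field: field_type}, frozenset(optional) | {field}


# Hypothetical schema for plot-level phenotyping measurements.
schema = {"plot_id": str, "height_cm": float}
old_record = {"plot_id": "P1", "height_cm": 42.0}
bad_record = {"plot_id": "P2", "height_cm": "tall"}

schema_v2, optional_v2 = add_optional_field(schema, frozenset(), "chlorophyll", float)
new_record = {"plot_id": "P3", "height_cm": 39.5, "chlorophyll": 31.2}
```

Rejecting `bad_record` at write time is the enforcement half; the fact that `old_record` still validates against `schema_v2` is the evolution half.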

What are the key technology components of a modern Lakehouse?

A Lakehouse is built on several key technological layers that work together [27] [28]:

| Architectural Layer | Key Technologies & Functions |

|---|---|

| Storage Layer | Cloud Object Storage (e.g., Amazon S3): Low-cost, durable storage for all data types in open formats like Parquet. |

| Transactional Metadata Layer | Open Table Formats (e.g., Apache Iceberg, Delta Lake): Provides ACID transactions, time travel, and schema enforcement, transforming storage into a reliable, database-like system. |

| Processing & Analytics Layer | Query Engines (e.g., Spark, Trino): Execute SQL queries at high speed. APIs (e.g., for Python, R): Enable direct data access for ML libraries like TensorFlow and scikit-learn. |

The Scientist's Toolkit: Essential Reagents & Materials for Plant Data Management

This table details key "research reagents" – the core technologies and tools required for building and operating a modern data architecture in a plant research context.

| Item | Function / Explanation |

|---|---|

| Cloud Object Storage | Provides low-cost, scalable, and durable storage for massive datasets (e.g., raw genomic sequences, thousands of plant images). |

| Apache Iceberg / Delta Lake | Open table formats that act as a "transactional layer," bringing database reliability (ACID compliance) to the data lake and preventing data swamps. |

| Apache Spark | A powerful data processing engine for large-scale ETL/ELT tasks, capable of handling both batch and streaming data. |

| Jupyter Notebooks | An interactive development environment for exploratory data analysis, prototyping machine learning models, and visualizing results. |

| Plant Ontologies | Standardized, controlled vocabularies (e.g., the Plant Ontology) to describe plant structures and growth stages, ensuring data consistency and integration across studies [30]. |

Data Visualization Guide: Effectively Communicating Plant Data

Presenting data in a visual form helps convey deeper meaning and encourages knowledge inference [30]. Follow these best practices for color use in your visualizations [31]:

- Create Associations: Use colors to trigger associations (e.g., a country's flag colors for country-specific data, green for growth-related metrics).

- Show Continuous Data: Use a single color in a gradient to communicate amounts of a single metric over time.

- Show Contrast: Use contrasting colors to differentiate between two distinct categories or metrics.

- Ensure Accessibility: Avoid colors that are not easily distinguishable. Use a limited palette (7 or fewer colors) and be mindful of color vision deficiencies. Use sufficient contrast between text and background colors.
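
"Sufficient contrast" can be checked numerically. The sketch below uses the relative luminance and contrast ratio definitions from the Web Content Accessibility Guidelines (WCAG), which are not mentioned in the source and are brought in here as one reasonable yardstick; the function names are illustrative.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to its linear-light value."""
    c = channel / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors; ranges from 1 (identical) to 21."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


ratio = contrast_ratio("#000000", "#ffffff")  # black text on a white background
```

WCAG level AA asks for a ratio of at least 4.5:1 for normal-size text, so a chart label can be tested against its background before a figure is published.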

FAQs: Data Integration and Management Platforms

ELT Platforms

Q1: What is the core difference between ETL and ELT, and why does it matter for pharma research?

ETL (Extract, Transform, Load) transforms data before loading it into a target system, which can be time-consuming and resource-intensive for large datasets. ELT (Extract, Load, Transform) loads raw data directly into the target system (like a cloud data warehouse) and performs transformations there. ELT is generally faster for data ingestion and leverages the power of modern data warehouses, making it suitable for the vast and varied data generated in pharma R&D [32].
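
The ELT pattern can be illustrated with Python's built-in `sqlite3` module standing in for a cloud warehouse. This is only a sketch: the table names, sensor IDs, and readings are invented, and the point is the ordering, in which raw data is loaded untouched and the transformation runs afterwards as SQL inside the target system.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Extract + Load: raw sensor readings land as-is, including mixed units and blanks.
conn.execute("CREATE TABLE raw_readings (sensor_id TEXT, reading TEXT, unit TEXT)")
raw_rows = [
    ("gh1-temp", "21.5", "C"),
    ("gh1-temp", "70.7", "F"),
    ("gh2-temp", "", "C"),  # missing value kept in the raw table
]
conn.executemany("INSERT INTO raw_readings VALUES (?, ?, ?)", raw_rows)

# Transform: performed inside the target system, after loading.
conn.execute("""
    CREATE TABLE clean_readings AS
    SELECT sensor_id,
           ROUND(CASE unit
                     WHEN 'F' THEN (CAST(reading AS REAL) - 32) * 5.0 / 9.0
                     ELSE CAST(reading AS REAL)
                 END, 2) AS temp_c
    FROM raw_readings
    WHERE reading <> ''
""")

raw_count = conn.execute("SELECT COUNT(*) FROM raw_readings").fetchone()[0]
clean = conn.execute("SELECT sensor_id, temp_c FROM clean_readings").fetchall()
```

Because the raw table is preserved, the transformation can be revised and rerun later without re-extracting data from the instruments, which is one practical reason ELT suits evolving research questions.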

Q2: When should a research facility choose a real-time operational sync tool over an analytics-focused ELT tool?

Your choice should be driven by the desired outcome. Use analytics-focused ELT tools (like Fivetran or Airbyte) when the goal is to move data from various sources into a central data warehouse or lake for dashboards, modeling, and BI/AI features. Choose a real-time operational sync tool (like Stacksync) when you need to maintain sub-second, bi-directional data consistency between live operational systems, such as ensuring a CRM, ERP, and operational database are instantly and consistently updated [33].

Q3: What are the key security and compliance features to look for in an ELT platform for handling sensitive research data?

At a minimum, require platforms to have SOC 2 Type II and ISO 27001 certifications. For workloads involving personal or patient data (PII or PHI), options for GDPR and HIPAA compliance are critical. Also look for features that support network isolation, such as VPC (Virtual Private Cloud) and Private Link, along with comprehensive, audited logs for tracking data access and changes [33].

Master Data Management (MDM) Solutions

Q4: What is a "golden record" in MDM, and why is it important for a plant research facility?

In MDM, a "golden record" is a single, trusted view of a master data entity (like a specific chemical compound, plant specimen, or supplier) created by resolving inconsistencies across multiple source systems. It is constructed using survivorship rules that determine the most accurate and up-to-date information from conflicting sources. For a research facility, this ensures that all scientists and systems are using the same definitive data, which is crucial for research reproducibility, supply chain integrity, and reliable reporting [34].
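
A survivorship rule can be as simple as "the most recent non-empty value wins". The sketch below applies that single rule to two conflicting source records for one plant specimen. The record layout, system names, and values are hypothetical, and commercial MDM products typically offer far richer, configurable rule sets.

```python
def golden_record(records, metadata_keys=("source", "updated")):
    """Merge source records attribute by attribute; the latest non-empty value survives."""
    merged = {}
    # ISO 8601 dates sort chronologically as strings, so later records overwrite earlier ones.
    for record in sorted(records, key=lambda r: r["updated"]):
        for key, value in record.items():
            if key in metadata_keys:
                continue
            if value not in (None, ""):
                merged[key] = value
    return merged


# Two hypothetical source systems describing the same specimen.
records = [
    {"source": "LIMS", "updated": "2025-01-10", "accession": "ACC-0042",
     "species": "Artemisia annua", "location": "Greenhouse 1", "supplier": ""},
    {"source": "ERP", "updated": "2025-03-02", "accession": "ACC-0042",
     "species": "", "location": "Greenhouse 2", "supplier": "Hypothetical Seeds Ltd"},
]

golden = golden_record(records)
```

Here the newer ERP record supplies the location and supplier, while the species survives from the older LIMS record because the ERP value is empty.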

Q5: How are modern MDM solutions leveraging Artificial Intelligence (AI)?

MDM vendors are increasingly adopting AI in several ways. Machine learning (ML) has long been used to improve the accuracy of merging and matching candidate master data records. Generative AI is now being used for tasks like creating product descriptions and automating the tagging of new data attributes. Furthermore, Natural Language Processing (NLP) can open up master data hubs to more intuitive, query-based interrogation by business users and researchers [34].

Troubleshooting Guides

Issue 1: Data Inconsistency Between Operational Systems (e.g., CRM and ERP)

Problem: Changes made in one live application (e.g., updating a specimen source in a CRM) are not reflected accurately or quickly in another connected system (e.g., the ERP), leading to operational errors.

Diagnosis: This is typically a failure of operational data synchronization, not an analytics problem. Analytics-first ELT tools are designed for one-way data flows to a warehouse and are not built for stateful, bi-directional sync between live apps.

Solution:

- Implement a Real-Time Bi-Directional Sync Platform: Use a tool specifically engineered for operational synchronization, such as Stacksync [33].

- Configure Conflict Resolution Rules: Within the sync platform, define rules to automatically resolve data conflicts. For example, you might set a rule that the most recent update to a "plant harvest date" always takes precedence.

- Validate with a Pilot: Connect two critical systems (e.g., your specimen management system and your lab inventory database) and configure sync for one key data object. Monitor for sub-second latency and successful conflict resolution before rolling out more broadly [33].
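
The conflict resolution rules described above can be pictured as a per-field rule table. The sketch below is a generic illustration and not the configuration format of Stacksync or any other product; the system names, field names, and the `latest_wins` label are assumptions.

```python
def resolve_conflict(field, change_a, change_b, rules):
    """Pick the winning value when two systems changed the same field."""
    rule = rules.get(field, "latest_wins")
    if rule != "latest_wins":
        # The rule names a system of record whose value always wins for this field.
        for change in (change_a, change_b):
            if change["system"] == rule:
                return change["value"]
    # Default: most recent modification wins (same-format UTC timestamps sort as strings).
    return max(change_a, change_b, key=lambda c: c["modified_at"])["value"]


# Hypothetical rules: harvest date follows the latest edit, storage location
# is always owned by the inventory database.
rules = {"harvest_date": "latest_wins", "storage_location": "inventory_db"}

specimen_change = {"system": "specimen_mgmt", "value": "2025-06-01",
                   "modified_at": "2025-06-02T09:15:00Z"}
inventory_change = {"system": "inventory_db", "value": "2025-06-03",
                    "modified_at": "2025-06-04T14:30:00Z"}

winner = resolve_conflict("harvest_date", specimen_change, inventory_change, rules)
```

Writing the rules down per field during the pilot makes it clear which system is authoritative for each attribute before the sync is rolled out more broadly.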

Issue 2: Poor Data Quality and Reliability in the Central Data Warehouse

Problem: Data loaded into the warehouse is often incomplete, inaccurate, or fails to meet quality checks, undermining trust in analytics and AI models.

Diagnosis: This can stem from a lack of automated data quality checks, insufficient transformation logic, or silent failures in data pipelines.

Solution:

- Adopt a Transformation Tool like dbt: Use dbt to standardize data transformation within your warehouse using SQL. It incorporates software engineering best practices like version control (via Git), automated testing, and comprehensive documentation [35].

- Implement Data Observability: Integrate a data observability platform like Monte Carlo. It uses machine learning to automatically monitor data pipelines and detect anomalies in freshness, volume, or schema, alerting your team to issues before they impact business decisions [35].

- Establish Data Governance: Use a modern data catalog like Atlan to automate data discovery, document business context, and track data lineage. This helps stakeholders understand data provenance and usage, improving overall trust and accountability [35].
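
The kinds of checks these tools automate can be sketched in plain Python. The example below is not the API of dbt or Monte Carlo; it only mirrors the ideas of not-null and uniqueness tests plus a simple volume anomaly check, with hypothetical column names and a z-score threshold chosen for illustration.

```python
from statistics import mean, stdev


def null_rows(rows, column):
    """Indices of rows where the column is missing or empty."""
    return [i for i, row in enumerate(rows) if row.get(column) in (None, "")]


def duplicate_values(rows, column):
    """Values that appear more than once in the column."""
    seen, dupes = set(), set()
    for row in rows:
        value = row.get(column)
        if value in seen:
            dupes.add(value)
        seen.add(value)
    return sorted(dupes)


def volume_anomaly(history, today, z_threshold=3.0):
    """Flag today's row count if it deviates strongly from recent loads."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold


# Hypothetical warehouse rows and daily load counts.
rows = [
    {"sample_id": "S1", "yield_g": 10.2},
    {"sample_id": "S2", "yield_g": None},
    {"sample_id": "S1", "yield_g": 9.8},
]
daily_counts = [1000, 1020, 980, 1010, 990]

missing = null_rows(rows, "yield_g")
dupes = duplicate_values(rows, "sample_id")
alert = volume_anomaly(daily_counts, today=400)
```

Running such checks on every load, and failing the pipeline loudly when they trip, is what turns silent failures into visible ones.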

Platform and Tool Comparison

Comparison of Leading ELT and Data Integration Platforms

The following table compares key platforms based on architecture, core strengths, and ideal use cases within pharma.

| Platform | Type / Architecture | Core Strengths & Features | Ideal Pharma Use Case |

|---|---|---|---|

| Airbyte [32] [35] | Open-source ELT | 600+ connectors, flexible custom connector development (CDK), strong community. | Integrating a wide array of unique or proprietary data sources (e.g., lab equipment outputs, specialized assays). |

| Fivetran [33] | Managed ELT | Fully-managed, reliable service with 500+ connectors; handles schema changes and automation. | Reliably moving data from common SaaS applications and databases to a central warehouse for analytics with minimal maintenance. |

| Estuary [33] [32] | Real-time ELT/ETL/CDC | Combines ELT with real-time Change Data Capture (CDC); low-latency data movement. | Streaming real-time data from operational systems (e.g., continuous manufacturing process data) for immediate analysis. |

| Stacksync [33] | Real-time Operational Sync | Bi-directional sync with conflict resolution; sub-second latency; stateful engine. | Keeping live systems (e.g., CRM, ERP, clinical databases) consistent in real-time for operational integrity. |

| Informatica [33] [34] | Enterprise ETL/iPaaS/MDM | Highly scalable and robust; supports complex data governance and multidomain MDM with AI (CLAIRE). | Large-scale, complex data integration and mastering needs, especially in large enterprises with stringent governance. |

| dbt [35] | Data Transformation | SQL-based transformation; version control, testing, and documentation; leverages warehouse compute. | Standardizing and documenting all data transformation logic for research data models in the warehouse (the "T" in ELT). |

Comparison of Selected Master Data Management (MDM) Vendors

This table outlines a selection of prominent MDM vendors, highlighting their specialties which can guide selection for specific research needs.

| Vendor | Description & Specialization | Relevance to Pharma Research |

|---|---|---|

| Informatica [34] | Multidomain MDM SaaS with AI (CLAIRE); pre-configured 360 applications for customer, product, and supplier data. | Managing master data for research materials, lab equipment, and supplier information across domains. |

| Profisee [34] | Cloud-native, multidomain MDM that is highly integrated with the Microsoft data estate (e.g., Purview). | Ideal for facilities already heavily invested in the Microsoft Azure and Purview ecosystem. |

| Reltio [34] | AI-powered data unification and management SaaS; strong presence in Life Sciences, Healthcare, and other industries. | Unifying complex research entity data (e.g., compounds, targets, patient-derived data) with AI. |

| Semarchy [34] | Intelligent Data Hub focused on multi-domain MDM with integrated governance, quality, and catalog. | Managing master data with a strong emphasis on data quality and governance workflows from the start. |

| Ataccama [34] | Unified data management platform offering integrated data quality, governance, and MDM in a single platform. | A consolidated approach to improving and mastering data without managing multiple point solutions. |

Visualizing the Modern Pharma Data Stack

Modern Pharma R&D Data Architecture

The following diagram illustrates the four-layer architecture of a modern data stack for pharmaceutical R&D, showing how data flows from source systems to actionable insights.

ELT & Data Integration Logical Workflow

This workflow details the process of moving data from source systems to a centralized repository and then to consuming applications.

The Scientist's Toolkit: Essential Data Management Solutions

The following table details key categories of tools and platforms that form the essential "research reagent solutions" for building a modern data stack in a pharmaceutical research environment.

| Tool Category | Function & Purpose | Example Solutions |

|---|---|---|

| Data Ingestion (ELT) | Extracts data from source systems and loads it into a central data repository. The first critical step in data consolidation. | Airbyte, Fivetran, Estuary [32] [35] |

| Data Storage / Warehouse | Provides a scalable, centralized repository for storing and analyzing structured and unstructured data. | Snowflake, Amazon Redshift, Google BigQuery [35] |

| Data Transformation | Cleans, enriches, and models raw data into analysis-ready tables within the warehouse. | dbt [35] |

| Master Data Management (MDM) | Creates and manages a single, trusted "golden record" for key entities (e.g., materials, specimens, suppliers). | Informatica MDM, Reltio, Profisee [34] |

| Data Observability | Provides monitoring and alerting to ensure data health, quality, and pipeline reliability. | Monte Carlo [35] |

| Data Governance & Catalog | Enables data discovery, lineage tracking, and policy management to ensure data is findable and compliant. | Atlan [35] |

| Business Intelligence (BI) | Allows researchers and analysts to explore data and build dashboards for data-driven decision-making. | Looker, Tableau [35] |

Establishing a Scalable Data Governance and Stewardship Framework

Modern plant research facilities generate vast amounts of complex data, from genomic sequences and phenotyping data to environmental sensor readings. Managing this data effectively requires a structured approach to ensure it remains findable, accessible, interoperable, and reusable (FAIR). A scalable data governance and stewardship framework provides the foundation for this management, establishing policies, roles, and responsibilities. For a multi-plant research facility, centralizing this framework is crucial for breaking down data silos, enabling cross-site collaboration, and maximizing the return on research investments [36] [37]. This technical support center addresses the specific implementation and troubleshooting challenges researchers and data professionals face when establishing such a framework.

Core Concepts: Governance vs. Stewardship

Before addressing specific issues, it is essential to understand the distinction between data governance and data stewardship, as they are complementary but distinct functions [37].

- Data Governance refers to the establishment of high-level policies, standards, and strategies for managing data assets. It defines the "what" and "why" – what rules must be followed and why they are important for achieving organizational goals [36] [37].

- Data Stewardship involves the practical implementation of these policies. Data stewards are responsible for the hands-on tasks that ensure data quality, integrity, and accessibility, answering the question of "how" the governance rules are executed day-to-day [38] [37].

The following diagram illustrates the relationship between these concepts and their overarching goal of enabling FAIR data:

Frequently Asked Questions (FAQs) and Troubleshooting Guides

FAQ 1: Roles and Responsibilities

Q: In our research institute, who should be responsible for data stewardship? We do not have dedicated staff for this role.

A: The role of a data steward can be fulfilled by individuals with various primary job functions. It is often the "tech-savvy" researcher, bioinformatician, or a senior lab member who takes on these responsibilities informally [38]. For a formal framework, we recommend:

- Assign a Lead Data Steward: Designate a principal investigator or a senior scientist as the lead data steward to oversee the framework.

- Define Domain Stewards: Appoint data stewards for specific domains (e.g., genomics, phenomics, metabolomics) who understand the data's context and metadata requirements.

- Seek Community Support: Leverage support from organizations like DataPLANT, which offer helpdesks and expert guidance for research data management [39].

Troubleshooting Guide: Researchers are resistant to adopting new data management responsibilities.

- Problem: Lack of buy-in from research staff who view data management as an administrative burden.

- Solution:

- Articulate the Benefit: Clearly explain how good data stewardship saves time during manuscript preparation, facilitates peer review, and enables data reuse for future projects.

- Integrate with Workflows: Embed data management tasks directly into existing experimental workflows rather than creating parallel processes.

- Secure Formal Support: Advocate for institutional recognition and support for data stewardship activities, such as including these efforts in performance evaluations or securing funding for dedicated data steward positions [38].

FAQ 2: Implementing FAIR Principles

Q: We want to make our plant phenomics data FAIR, but the process seems complex. Where do we start?

A: Start by focusing on metadata management and persistence [40] [37].

- Use Community Standards: Adopt metadata standards specific to plant phenomics, which can be found on resources like FAIRsharing.org [37].

- Assign Persistent Identifiers: Use identifiers like Digital Object Identifiers (DOIs) for your datasets when you deposit them in a repository.

- Select an Appropriate Repository: Deposit data in a recognized repository. For plant sciences, consider specialized repositories or general-purpose ones like Zenodo or the Open Science Framework (OSF) [37].

Troubleshooting Guide: Our legacy datasets are not FAIR. How can we "FAIRify" them?

- Problem: Historical data lacks sufficient metadata, standard formats, or clear licensing information.

- Solution:

- Inventory and Prioritize: Identify high-value legacy datasets for FAIRification.

- Create Data Dictionaries: Document the meaning, format, and source of each data column.

- Use Tools like ADataViewer: For specific data types, tools like the ADataViewer for Alzheimer's disease demonstrate how to establish interoperability at the variable level. Similar models can be developed for plant data [37].

- Plan for the Future: Use the lessons learned from retrofitting old data to improve the management of new data being generated.
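
The data dictionary step above can be bootstrapped automatically before a person fills in the meaning of each column. The following is a minimal sketch, assuming the legacy data is available as CSV text; the function names (`infer_type`, `draft_data_dictionary`) and the sample columns are hypothetical and are not part of any tool cited in this guide.

```python
import csv
import io


def infer_type(values):
    """Guess a coarse type label from the non-empty string values of a column."""
    non_empty = [v for v in values if v.strip() != ""]
    if not non_empty:
        return "empty"
    for label, cast in (("integer", int), ("float", float)):
        try:
            for v in non_empty:
                cast(v)
            return label
        except ValueError:
            continue
    return "text"


def draft_data_dictionary(csv_text, source):
    """Return one draft dictionary entry per column of a CSV table."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    entries = []
    for name in reader.fieldnames or []:
        values = [row[name] or "" for row in rows]
        entries.append({
            "column": name,
            "inferred_type": infer_type(values),
            "missing_values": sum(1 for v in values if v.strip() == ""),
            "source": source,
            # The meaning cannot be inferred; a researcher has to supply it.
            "meaning": "TODO: describe, including units",
        })
    return entries


if __name__ == "__main__":
    # Hypothetical legacy table used only for illustration.
    sample = "plot_id,height_cm,genotype\n1,12.5,Col-0\n2,,Ler\n"
    for entry in draft_data_dictionary(sample, "legacy_trial.csv"):
        print(entry)
```

The inferred types and missing-value counts are only a starting point; the "meaning" field is deliberately left as a placeholder so that the documentation step is not skipped.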

FAQ 3: Tooling and Infrastructure

Q: What tools and infrastructure are needed to support data governance in a centralized, multi-plant research facility?

A: A centralized architecture is key. This can be inspired by systems like the TDM Multi Plant Management software, which uses a central database while allowing individual plants or research groups controlled views and access relevant to their work [41]. Essential tools include:

- Centralized Data Catalog: Provides a single point of discovery for all data assets.

- Metadata Management Tools: Help create, store, and manage rich metadata.

- Repository Software: Platforms like e!DAL can be used to store, share, and publish research data [40].

Troubleshooting Guide: Data is siloed across different research groups and locations.

- Problem: Inability to access or integrate data from different teams, leading to duplicated efforts and incomplete analyses.

- Solution:

- Implement a Centralized Portal: Establish a centralized data portal based on a unified architecture, similar to the vegetation monitoring app used by the National Estuarine Research Reserve System, which provides a single access point for data from multiple reserves [42].

- Enforce Data Standards: Mandate the use of common data models and controlled vocabularies across all groups.

- Promote a Data Sharing Culture: Recognize and reward researchers who proactively share high-quality data.
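
One way to enforce common data models and controlled vocabularies is to validate every record before it enters the central portal. The sketch below is illustrative only: the field names, the vocabulary contents, and the `validate_record` function are assumptions, and a real facility would draw its terms from agreed ontologies or a shared glossary.

```python
# Hypothetical controlled vocabularies; real ones would come from agreed
# ontologies or the facility's shared glossary.
CONTROLLED_VOCABULARY = {
    "tissue_type": {"leaf", "root", "stem", "seed"},
    "domain": {"genomics", "phenomics", "metabolomics"},
}
# Hypothetical minimum set of fields in the common data model.
REQUIRED_FIELDS = ("sample_id", "species", "tissue_type", "domain")


def is_blank(value):
    """Treat None and whitespace-only values as missing."""
    return value is None or str(value).strip() == ""


def validate_record(record):
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    for field in REQUIRED_FIELDS:
        if is_blank(record.get(field)):
            problems.append(f"missing required field: {field}")
    for field, allowed in CONTROLLED_VOCABULARY.items():
        value = record.get(field)
        if not is_blank(value) and value not in allowed:
            problems.append(f"{field}={value!r} is not in the controlled vocabulary")
    return problems


if __name__ == "__main__":
    record = {"sample_id": "S2", "species": "", "tissue_type": "Leaf", "domain": "phenomics"}
    for problem in validate_record(record):
        print(problem)
```

Returning a list of problems rather than raising on the first error lets a submitting group fix everything in one pass, which tends to matter for adoption.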

Quantitative Framework: Comparing Data Governance Approaches

Selecting an appropriate framework depends on your facility's primary focus. The table below summarizes some of the top frameworks in 2025 to aid in this decision [36].

| Framework Name | Primary Focus | Key Features | Ideal Use Case in Plant Research |
|---|---|---|---|
| DAMA-DMBOK | Comprehensive Data Management | Provides a complete body of knowledge; defines roles & processes. | Enterprise-wide data management foundation. |
| COBIT | IT & Business Alignment | Strong on risk management & audit readiness; integrates with ITIL. | Facilities with complex IT environments and compliance needs. |
| CMMI DMMM | Progressive Maturity Improvement | Focus on continuous improvement; provides a clear maturity roadmap. | Organizations building capabilities gradually. |
| NIST Framework | Security and Privacy | Emphasizes data integrity, security, and privacy risk management. | Managing sensitive pre-publication or IP-related data. |
| FAIR Principles | Data Reusability & Interoperability | Lightweight framework for making data findable and reusable. | Academic & collaborative research projects; open data initiatives. |
| CDMC | Cloud Data Management | Addresses cloud-specific governance like multi-cloud management. | Facilities heavily utilizing cloud platforms for data storage/analysis. |

The Researcher's Toolkit: Essential Reagents for Data Stewardship

Effective data stewardship depends on a set of "reagents", that is, essential tools and resources. The following table details key components of this toolkit [43] [37].

| Tool Category | Specific Examples / Standards | Function in the Data Workflow |
|---|---|---|
| Metadata Standards | MIAPPE (Minimum Information About a Plant Phenotyping Experiment) | Provides a standardized checklist for describing phenotyping experiments, ensuring interoperability. |
| Data Repositories | Zenodo, Open Science Framework (OSF), Dryad, FigShare, PGP Repository | Provides a platform for long-term data archiving, sharing, and publication with a persistent identifier. |
| Reference Databases | FAIRsharing, re3data | Registries to find appropriate data standards, policies, and repositories for a given scientific domain. |
| Data Management Tools | ADataViewer, e!DAL | Applications that help in structuring, annotating, and making specific types of data interoperable and reusable. |
| Governance Frameworks | DAMA-DMBOK, FAIR Principles | Provides the overarching structure, policies, and principles for managing data assets throughout their lifecycle. |

Experimental Protocol: A Workflow for Publishing FAIR Plant Data

This protocol provides a detailed, step-by-step methodology for researchers to prepare and publish a dataset at the conclusion of an experiment, ensuring it adheres to FAIR principles.

1. Pre-Publication Data Curation

- Action: Combine all raw, processed, and analyzed data related to the experiment into a single, organized project directory.

- Quality Control: Perform data validation and cleaning to address inconsistencies or missing values. Document any data transformations.

2. Metadata Annotation

- Action: Create a metadata file describing the dataset. Use a standard like MIAPPE for plant phenotyping data.

- Required Information: Include experimental design, growth conditions, measurement protocols, data processing steps, and definitions of all column headers in your data files.

3. Identifier Assignment and Repository Submission

- Action: Package your data and metadata. Upload to a chosen repository (e.g., Zenodo).

- Output: The repository will assign a persistent identifier (e.g., a DOI), which makes your dataset permanently citable.
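
The packaging step can be made more verifiable by writing a manifest that lists every file in the project directory with its size and checksum, alongside the descriptive metadata. The following is a minimal sketch using only the Python standard library; the `build_manifest` function, the `manifest.json` file name, and the metadata keys are assumptions for illustration, not a repository requirement. Uploading to a repository and obtaining a DOI are outside its scope.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def sha256_of(path):
    """Compute the SHA-256 checksum of a file in fixed-size chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(project_dir, metadata, manifest_name="manifest.json"):
    """Write and return a manifest of all files under project_dir."""
    project = Path(project_dir)
    files = []
    for path in sorted(project.rglob("*")):
        # Skip directories and any manifest left over from an earlier run.
        if path.is_file() and path.name != manifest_name:
            files.append({
                "path": path.relative_to(project).as_posix(),
                "bytes": path.stat().st_size,
                "sha256": sha256_of(path),
            })
    manifest = {"metadata": metadata, "files": files}
    (project / manifest_name).write_text(json.dumps(manifest, indent=2), encoding="utf-8")
    return manifest


if __name__ == "__main__":
    # Hypothetical project directory created only for this demonstration.
    with tempfile.TemporaryDirectory() as tmp:
        (Path(tmp) / "plants.csv").write_text("plot_id,height_cm\n1,12.5\n", encoding="utf-8")
        result = build_manifest(tmp, {"title": "Hypothetical growth trial", "standard": "MIAPPE"})
        print(json.dumps(result, indent=2))
```

Checksums let a later user confirm that the files downloaded from the repository match what was deposited.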

The workflow for this protocol is visualized below, showing the parallel responsibilities of the researcher and the data steward in this process, a collaboration that is critical for success [38].

Implementing Metadata Management and Data Cataloging for Discoverability

Q: What is the fundamental difference between a data catalog and metadata management?

A: Metadata management is the comprehensive strategy governing how you collect, manage, and use metadata (data about data). A data catalog is a specific tool that implements this strategy; it is an organized inventory of data assets that enables search, discovery, and governance. Think of metadata management as the "plan" and the data catalog as the "platform" that executes it [44].

Q: Why are these concepts critical for a modern plant research facility?

A: Plant science is generating vast, multi-dimensional datasets from genomics, phenomics, and environmental sensing. Effective metadata management and cataloging transform these raw data into FAIR (Findable, Accessible, Interoperable, and Reusable) assets. This is essential for elucidating complex gene-environment-management (GxExM) interactions and enabling artificial intelligence (AI) applications [23].

Q: What are the main types of metadata we need to manage?

A: Metadata can be categorized into three types:

- Physical: Describes the physical storage and format of data (e.g., file location, type, size).

- Logical: Describes the structure and flow of data through systems (e.g., database schemas, data models).

- Conceptual: Describes the business or research context and meaning of data (e.g., protocols, experimental conditions, business rules) [45].
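
To make the three categories concrete, the sketch below models one dataset record with a separate section for each type. It is an illustration under stated assumptions: the class names, field names, and example values are hypothetical and do not correspond to any particular catalog product.

```python
from dataclasses import asdict, dataclass, field


@dataclass
class PhysicalMetadata:
    """Where and how the data is stored."""
    location: str
    file_type: str
    size_bytes: int


@dataclass
class LogicalMetadata:
    """How the data is structured."""
    columns: dict  # column name -> data type


@dataclass
class ConceptualMetadata:
    """What the data means in its research context."""
    protocol: str
    experimental_conditions: dict = field(default_factory=dict)


@dataclass
class DatasetMetadata:
    dataset_id: str
    physical: PhysicalMetadata
    logical: LogicalMetadata
    conceptual: ConceptualMetadata


def example_record():
    """Build a hypothetical record for a phenotyping table."""
    return DatasetMetadata(
        dataset_id="trial-001",
        physical=PhysicalMetadata("nas://lab/trial-001/heights.csv", "csv", 2048),
        logical=LogicalMetadata({"plot_id": "integer", "height_cm": "float"}),
        conceptual=ConceptualMetadata("Manual height measurement", {"light": "long day"}),
    )


if __name__ == "__main__":
    print(asdict(example_record()))
```

Keeping the three sections separate makes it easier to see which parts can be harvested automatically (physical, often logical) and which must be supplied by researchers (conceptual).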

Implementation and Troubleshooting: FAQs

Q: Our researchers use diverse formats. How do we standardize metadata for plant-specific data?

A: Leverage community-accepted standards and ontologies. This ensures interoperability and reusability.

- For genomic data: Use standards like FASTA, and submit data to repositories like NCBI that enforce specific metadata requirements.

- For agricultural model variables: Use the ICASA Master Variable list.

- For taxonomy: Use the Integrated Taxonomic Information System (ITIS).

- For geospatial data: Adhere to the ISO 19115 standard, required for all USDA geospatial data [46].

Tools like DataPLAN can guide you in selecting the appropriate standards for your project and funding body [47].

Q: We have a data catalog, but adoption is low. How can we improve usability?

A: A data catalog must be more than just a metadata repository. To encourage adoption, ensure your catalog provides:

- Google-like search and discovery for all data assets.

- Data lineage to trace the origin and transformation of data.

- A collaborative environment with features like annotations and shared glossaries.

- Integration with analytical tools used by your researchers [44].

The goal is to create a user-friendly, "one-stop shop" for data that is "understandable, reliable, high-quality, and discoverable" [44].
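
Two of the catalog features listed above, keyword search and data lineage, can be illustrated with a very small in-memory model. This is a sketch only: the `CATALOG` entries, the `derived_from` field, and both function names are hypothetical, and a production catalog would provide these capabilities through its own interface.

```python
# Hypothetical catalog: each asset records which assets it was derived from.
CATALOG = {
    "raw_images": {
        "description": "RGB images from the phenotyping platform",
        "derived_from": [],
    },
    "leaf_area": {
        "description": "Leaf area per plant extracted from images",
        "derived_from": ["raw_images"],
    },
    "growth_curves": {
        "description": "Fitted growth curves per genotype",
        "derived_from": ["leaf_area"],
    },
}


def search(catalog, keyword):
    """Return asset names whose name or description contains the keyword."""
    keyword = keyword.lower()
    return sorted(
        name for name, entry in catalog.items()
        if keyword in name.lower() or keyword in entry["description"].lower()
    )


def lineage(catalog, name):
    """Return all upstream assets of `name`, nearest ancestors first."""
    seen, order = set(), []
    queue = list(catalog[name]["derived_from"])
    while queue:
        parent = queue.pop(0)
        if parent in seen:
            continue
        seen.add(parent)
        order.append(parent)
        queue.extend(catalog[parent]["derived_from"])
    return order


if __name__ == "__main__":
    print(search(CATALOG, "images"))
    print(lineage(CATALOG, "growth_curves"))
```

Storing only the direct parents of each asset and walking the graph on demand keeps the lineage consistent when an intermediate dataset is regenerated.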

Q: A key team member left, and critical experimental metadata is missing. How can we prevent this?

A: Implement a centralized, institutional metadata management system, such as an electronic lab notebook (ELN) based on an open-source wiki platform (e.g., DokuWiki). This system should capture all experimental details from the start, using predefined, selectable terms for variables and procedures. The core principle is that any piece of metadata is input only once and is immediately accessible to the team, mitigating knowledge loss [48]. Clearly define roles and responsibilities for data management in your Data Management Plan (DMP) to ensure continuity [46].

Q: How do we handle data quality issues from high-volume sources like citizen science platforms or automated phenotyping?

A: Proactive data quality frameworks are essential. For instance, when using citizen science data from platforms like iNaturalist, be aware of challenges such as:

- Insufficient photographs for validation.

- Misidentifications.

- Spatial inaccuracies.

Mitigation strategies include using only "Research Grade" observations, verifying data with original images, and engaging with the observer community for clarification [49]. For high-throughput phenotyping, implement automated quality control checks and use ML models that are robust to environmental variability [50] [23].
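
As a rough illustration of these quality-control ideas, the sketch below filters citizen science observations by grade and applies simple plausibility ranges to phenotyping measurements. The record layout is an assumption: the `quality_grade` key and its `"research"` value are taken to mirror how iNaturalist exports label Research Grade observations, and the trait names and limits in `PLAUSIBLE_RANGES` are hypothetical and would need to be set per species and sensor.

```python
# Hypothetical plausibility limits; real limits depend on species and sensor.
PLAUSIBLE_RANGES = {"height_cm": (0.0, 400.0), "leaf_count": (0, 500)}


def keep_research_grade(observations):
    """Keep only observations labelled as research grade (assumed field name)."""
    return [o for o in observations if o.get("quality_grade") == "research"]


def range_check(record, ranges=PLAUSIBLE_RANGES):
    """Return a list of flags for missing or implausible trait values."""
    flags = []
    for trait, (low, high) in ranges.items():
        value = record.get(trait)
        if value is None:
            flags.append(f"{trait}: missing")
        elif not (low <= value <= high):
            flags.append(f"{trait}: {value} outside [{low}, {high}]")
    return flags


if __name__ == "__main__":
    observations = [
        {"id": 1, "quality_grade": "research"},
        {"id": 2, "quality_grade": "needs_id"},
    ]
    print(keep_research_grade(observations))
    print(range_check({"height_cm": -3.0, "leaf_count": 12}))
```

Range checks of this kind only catch gross errors; flagged records are better routed to a person for review than silently dropped.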

Quantitative Data and Standards

Table 1: Performance Comparison of Deep Learning Models on Plant Disease Detection Datasets

| Model Architecture | Reported Accuracy (Laboratory Conditions) | Reported Accuracy (Field Deployment) | Key Strengths |
|---|---|---|---|
| SWIN (Transformer) | Not Specified | ~88% | Superior robustness and real-world accuracy [50] |
| ResNet50 (CNN) | 95-99% | ~70% | Strong baseline performance, widely adopted [50] |
| Traditional CNN | Not Specified | ~53% | Demonstrates performance gap in challenging conditions [50] |

Table 2: Essential Metadata Standards for Plant Science Research

| Research Domain | Standard or Ontology | Primary Use Case |
|---|---|---|
| Genomics & Bioinformatics | Gene Ontology (GO) | Functional annotation of genes [46] |
| Agricultural Modeling | ICASA Master Variable List | Standardizing variable names for agricultural models [46] |
| Taxonomy | Integrated Taxonomic Information System (ITIS) | Authoritative taxonomic information [46] |
| Geospatial Data | ISO 19115 | Required metadata standard for USDA geospatial data [46] |
| General Data Publication | DataCite | Metadata schema for citing datasets in repositories like Ag Data Commons [46] |

Experimental Protocol: Establishing a Centralized Metadata Management System

Objective: To deploy a centralized, wiki-based metadata management system for a plant research laboratory to enhance rigor, reproducibility, and data discoverability.

Methodology:

System Setup:

- Hardware: Install the system on a Network-Attached Storage (NAS) device within the local lab network for optimal control and accessibility. Example: Synology DS3617xs [48].

- Software: Install DokuWiki, a free and open-source wiki platform, on the NAS. Configure access-control list (ACL) permissions for security [48].

Metadata Schema Design:

- Define Core Entities: Establish and create wiki pages for key experimental concepts: Subject, Sample, Method, DataFile, Analysis.

- Create Data Properties: For each entity, define descriptive properties (e.g., for a Sample, properties include Species, TissueType, CollectionDate).

- Establish Object Properties: Define relationships between entities (e.g., Sample X was generated_using Method Y) [48].

- Incorporate Standards: Integrate plant-specific ontologies (e.g., Plant Ontology) and controlled vocabularies into the schema to standardize entries [46] [30].

Population and Integration:

- Researchers input metadata from the moment data is gathered, using the predefined terms within the wiki.

- Configure programming software (e.g., R, Python, MATLAB) to directly query the system's underlying database (e.g., sqlite3) during data analysis, automating the linkage between data files and their metadata [48].
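
The linkage between data files and their metadata can be sketched with Python's built-in `sqlite3` module. The schema below is an assumption made for illustration: the table and column names follow the entities and properties described in this protocol (Sample, Method, DataFile, generated_using), but they are not the actual database layout of DokuWiki or of the system described in [48], which should be inspected before writing real queries.

```python
import sqlite3

# Hypothetical schema mirroring the entities and relationships in the protocol.
SCHEMA = """
CREATE TABLE method (
    method_id INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
CREATE TABLE sample (
    sample_id INTEGER PRIMARY KEY,
    species TEXT NOT NULL,
    tissue_type TEXT NOT NULL,
    collection_date TEXT NOT NULL,
    generated_using INTEGER NOT NULL REFERENCES method(method_id)
);
CREATE TABLE data_file (
    path TEXT PRIMARY KEY,
    sample_id INTEGER NOT NULL REFERENCES sample(sample_id)
);
"""


def open_store(path=":memory:"):
    """Open a metadata store and create the illustrative schema."""
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")  # enforce the relationships
    conn.executescript(SCHEMA)
    return conn


def metadata_for_file(conn, file_path):
    """Look up sample and method metadata for one data file, or None."""
    row = conn.execute(
        """
        SELECT s.species, s.tissue_type, s.collection_date, m.name
        FROM data_file AS f
        JOIN sample AS s ON s.sample_id = f.sample_id
        JOIN method AS m ON m.method_id = s.generated_using
        WHERE f.path = ?
        """,
        (file_path,),
    ).fetchone()
    if row is None:
        return None
    return dict(zip(("species", "tissue_type", "collection_date", "method"), row))


def load_example(conn):
    """Insert one hypothetical method, sample, and data file."""
    conn.execute("INSERT INTO method (method_id, name) VALUES (1, 'RGB imaging')")
    conn.execute(
        "INSERT INTO sample VALUES (1, 'Arabidopsis thaliana', 'leaf', '2025-03-14', 1)"
    )
    conn.execute("INSERT INTO data_file VALUES ('raw/plant_001.png', 1)")


if __name__ == "__main__":
    store = open_store()
    load_example(store)
    print(metadata_for_file(store, "raw/plant_001.png"))
```

An analysis script can call a lookup like `metadata_for_file` at the point where it opens each data file, so the metadata is read from the central store rather than retyped into the script.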

Workflow Integration:

- The diagram below illustrates the flow of data and metadata from acquisition through to discovery, enabled by the centralized system.